Model Context Protocols (MCPs) in Generative AI: Current State and Future Impact

Introduction

Generative AI systems have grown remarkably powerful at producing text, code, and other content. However, a longstanding challenge has been providing these models with context – access to up-to-date data, tools, and environments beyond their static training knowledge. Historically, hooking an AI model to external sources (files, APIs, databases, etc.) required brittle, custom integrations or one-off plugins for each source, making it hard to scale and maintain. Model Context Protocols (MCPs) have emerged as a solution to this problem. In late 2024, Anthropic introduced the Model Context Protocol (MCP) as an open standard to “bridge AI assistants with the world of data and tools”. Since then, MCPs have gained rapid traction across the tech industry as a way to standardize how large language models (LLMs) and other generative AI systems interact with external context. This report provides a deep dive into MCPs – explaining their technical architecture and functionality, highlighting key initiatives (from OpenAI, Anthropic, Hugging Face, and others), and examining how companies globally are adopting MCPs for real-world applications. It also discusses the future impact of MCPs on making AI more scalable, modular, and context-aware.

Technical Foundations of Model Context Protocols

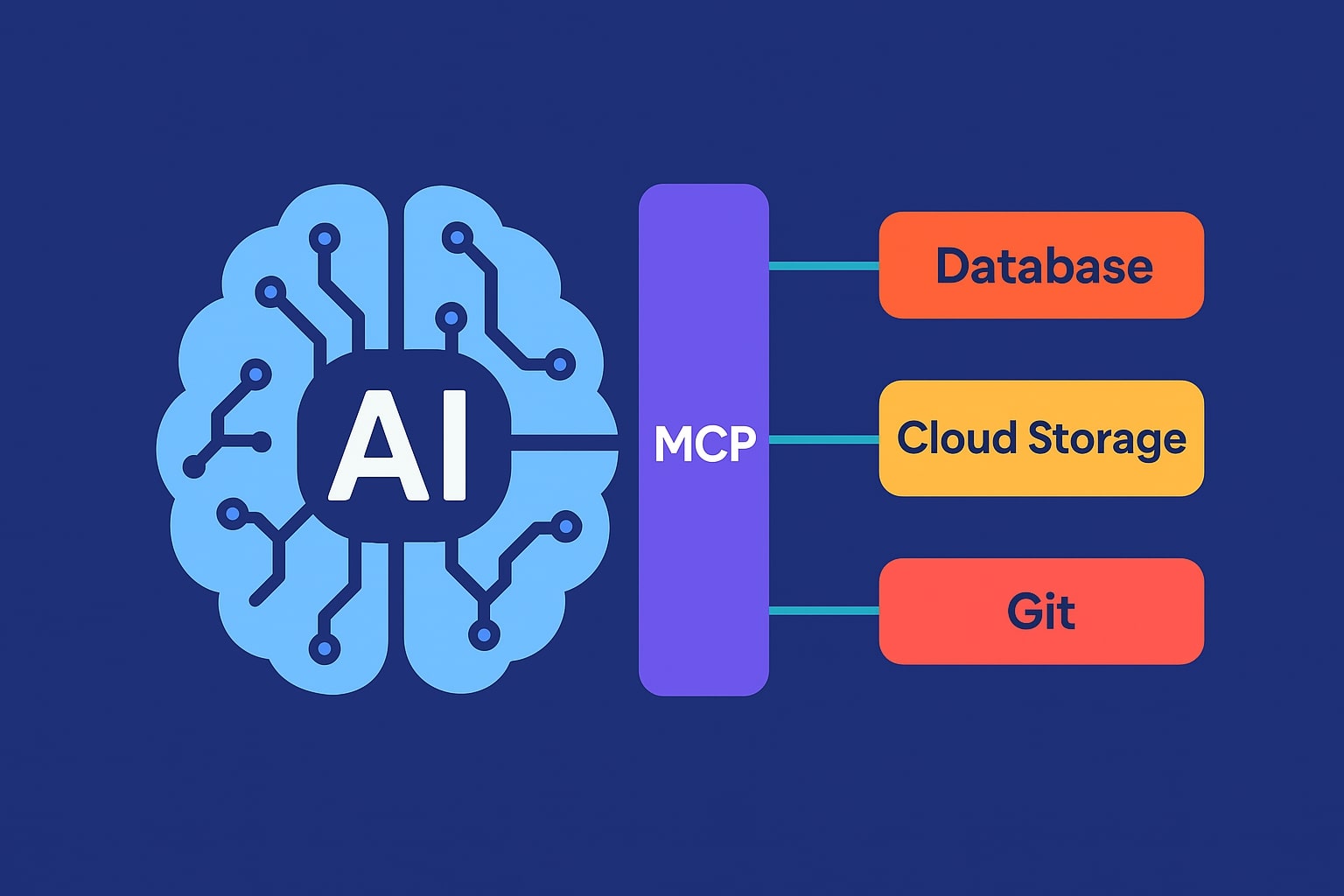

What are MCPs? At its core, an MCP is an open protocol that standardizes how AI applications provide context to and receive data from large models. Anthropic likens MCP to a “USB-C port for AI applications” – a universal connector for AI models to plug into various data sources and tools. In practical terms, MCP defines a common language and message format for AI assistants (or agents) to communicate with external systems. Instead of each AI integration speaking a different dialect, MCP provides one consistent interface. This dramatically simplifies development: an AI agent only needs to speak MCP, and it can then interact with any number of tools that also support MCP. As a result, adding new capabilities (e.g. accessing a database or calling an API) no longer means writing a custom integration from scratch – it just means connecting another MCP-compatible module.

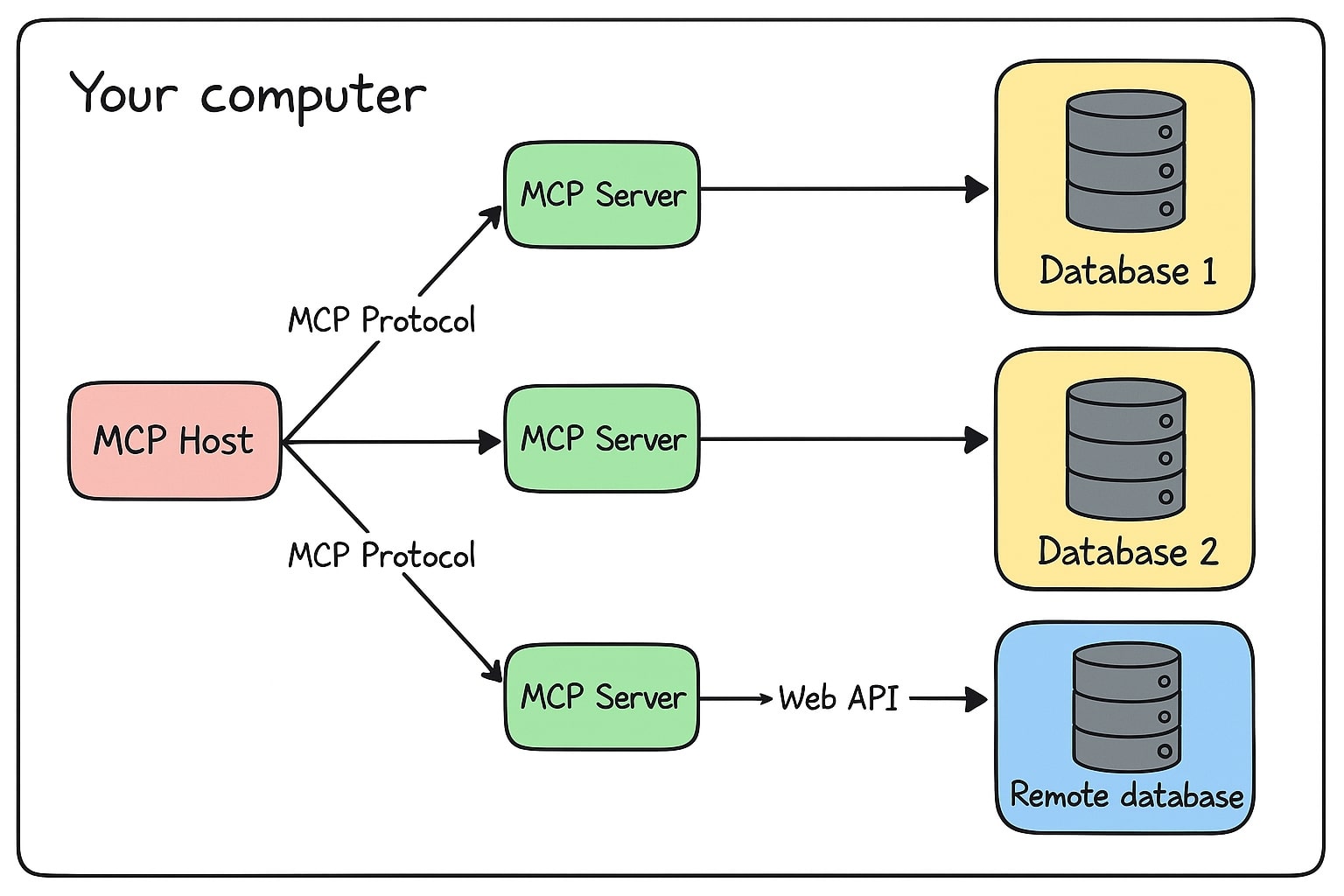

Architecture and Components: MCP adopts a flexible client–server architecture. An AI application (for example, a chat assistant or a coding copilot) acts as an MCP host, which runs MCP client components. In the specification, each client maintains a one-to-one connection with an MCP server, so a host that uses several servers runs several clients. Each MCP server is a lightweight program that exposes a specific external capability (a data source or tool) through the standardized protocol. For instance, one MCP server might provide access to a file system, another to a database, another to an email API, each acting as a secure adapter for that resource. The host's clients initiate a connection to each server the application needs and communicate with it over MCP. The architecture figure illustrates this pattern: a single AI host connects to multiple servers, each of which interfaces with a different data source.
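To make the host and server roles concrete, here is a minimal in-process sketch in Python. It is not the MCP SDK: real servers run as separate processes reached over a transport, while here each "server" is a plain object so the routing logic is easy to see. `ToyServer`, `ToyHost`, and both tools are hypothetical names. Because the specification pairs each client with exactly one server, the host keeps one connection per server and routes each tool call to the server that advertised that tool.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToyServer:
    """Stands in for one MCP server that exposes a single external capability."""
    name: str
    tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)

    def list_tools(self) -> List[str]:
        return sorted(self.tools)

    def call_tool(self, tool: str, arguments: Dict[str, Any]) -> Any:
        return self.tools[tool](**arguments)


class ToyHost:
    """Stands in for an MCP host; each entry in _connections plays the role of one client."""

    def __init__(self) -> None:
        self._connections: Dict[str, ToyServer] = {}  # server name -> connection
        self._routes: Dict[str, str] = {}  # tool name -> server name

    def connect(self, server: ToyServer) -> None:
        # Open one connection per server, then discover and index its tools.
        self._connections[server.name] = server
        for tool in server.list_tools():
            if tool in self._routes:
                raise ValueError(f"tool name collision: {tool}")
            self._routes[tool] = server.name

    def available_tools(self) -> List[str]:
        return sorted(self._routes)

    def call(self, tool: str, **arguments: Any) -> Any:
        # Route the call to whichever server advertised this tool.
        server = self._connections[self._routes[tool]]
        return server.call_tool(tool, arguments)


# Hypothetical servers: a read-only "filesystem" and a tiny "database".
files = ToyServer("filesystem", {"read_file": lambda path: {"notes.txt": "hello"}[path]})
db = ToyServer("database", {"count_orders": lambda customer: {"acme": 3}.get(customer, 0)})

host = ToyHost()
host.connect(files)
host.connect(db)
print(host.available_tools())                      # ['count_orders', 'read_file']
print(host.call("read_file", path="notes.txt"))    # hello
print(host.call("count_orders", customer="acme"))  # 3
```

Rejecting duplicate tool names is a choice made for this sketch; a real host might instead namespace tools by server.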

Under the hood, MCP communication is built on a message-passing layer that uses the JSON-RPC 2.0 format. The protocol defines standard message types – Requests (with parameters, expecting a result), Results (successful responses), Errors (error responses), and Notifications (one-way messages that don’t expect a reply). This design is reminiscent of familiar standards like the Language Server Protocol, enabling robust request/response interactions. MCP supports multiple transport mechanisms for transmitting these messages. The initial specification defines two transports: a Stdio transport (where the server runs as a local subprocess and communicates via standard input/output) and an HTTP+SSE transport (where the server runs remotely, using HTTP POST for requests and Server-Sent Events for streaming responses). In both cases, the message content is the same JSON-RPC structure; only the channel differs. This means an AI agent can talk to a local tool or a cloud service uniformly via MCP. The connection lifecycle begins with a simple handshake (an exchange of protocol version and capabilities), followed by a free exchange of requests or notifications in either direction. The MCP client and server can each initiate calls, allowing two-way interaction – e.g. the AI can ask a tool for data, or a tool can send the AI an update.
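The message layer can be illustrated without any MCP library. The sketch below builds the handshake (an `initialize` request followed by the `notifications/initialized` notification), a `tools/list` request, and a `tools/call` request, frames them as newline-delimited JSON as the stdio transport does, and classifies each message into the four types described above. Method names follow the MCP specification; the protocol version string, the client name, and the `read_file` tool are illustrative assumptions.

```python
import io
import json
from typing import Any, Dict, Optional


def request(msg_id: int, method: str, params: Optional[dict] = None) -> Dict[str, Any]:
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "id": msg_id, "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def notification(method: str, params: Optional[dict] = None) -> Dict[str, Any]:
    # A notification carries no "id", so the receiver must not reply to it.
    msg: Dict[str, Any] = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def kind(msg: Dict[str, Any]) -> str:
    """Classify a message as request, notification, result, or error."""
    if "method" in msg:
        return "request" if "id" in msg else "notification"
    return "error" if "error" in msg else "result"


# Handshake, tool discovery, and one tool call, in the order a client sends them.
outgoing = [
    request(1, "initialize", {
        "protocolVersion": "2024-11-05",
        "capabilities": {},
        "clientInfo": {"name": "example-host", "version": "0.1"},
    }),
    notification("notifications/initialized"),
    request(2, "tools/list"),
    request(3, "tools/call", {"name": "read_file", "arguments": {"path": "notes.txt"}}),
]

# Over stdio, each message is one line of JSON with no embedded newlines.
wire = io.StringIO("".join(json.dumps(m) + "\n" for m in outgoing))
decoded = [json.loads(line) for line in wire]
print([kind(m) for m in decoded])  # ['request', 'notification', 'request', 'request']

# Example replies from a server: one successful result and one error.
ok = {"jsonrpc": "2.0", "id": 3, "result": {"content": [{"type": "text", "text": "hello"}]}}
err = {"jsonrpc": "2.0", "id": 4, "error": {"code": -32601, "message": "Method not found"}}
print(kind(ok), kind(err))  # result error
```

The same dictionaries would be sent unchanged over an HTTP-based transport; only the framing step would differ.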

Integration with LLMs and Multimodal Systems: Crucially, MCP by itself is model-agnostic – it doesn’t assume any specific ML model. Instead, it provides a channel through which a generative model can request information or actions. In practice, current large language models make use of MCPs via their function-calling / tool-use abilities. Modern LLMs (OpenAI GPT-4, Anthropic Claude, Google’s Gemini, etc.) support a paradigm where the model can invoke functions during a conversation. MCP leverages this by informing the model (through the tool definitions the AI host includes in its requests) which “tools” are available from connected MCP servers. For example, OpenAI’s Agents SDK will call list_tools() on each MCP server and include those tool definitions in the prompt, so the LLM knows it can call a function (like searchDocument() or getFile()) provided by an MCP server. When the LLM decides to use one, the host’s MCP client invokes that tool via the MCP protocol and returns the result to the model. This mechanism effectively extends the model’s capabilities modularly: instead of training one giant model that knows everything, the model can delegate subtasks to specialist modules. The concept applies beyond text as well – for instance, a text-based LLM could have an MCP server that does image recognition (turning an image into a description) or one that controls a robot arm. In fact, MCP is inherently multimodal-friendly because tools can handle non-text data; an AI agent could call a vision API, a speech synthesizer, or an IoT sensor via MCP just as easily as it runs a text query. This opens the door for composite AI systems (where an LLM orchestrates calls to vision, audio, or other modality-specific models through MCP).
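The tool-discovery step can be sketched as a translation from MCP tool definitions (each with a `name`, `description`, and JSON Schema `inputSchema`) into the function-calling format many chat APIs accept, such as the `{"type": "function", "function": {...}}` entries of OpenAI's Chat Completions `tools` parameter. Both the model and the MCP round trip are stubbed out: `fake_model`, `call_mcp_tool`, and the `get_file` tool are hypothetical stand-ins, not SDK functions.

```python
import json
from typing import Any, Dict, List

# What a server might return from tools/list (one hypothetical tool).
mcp_tools = [{
    "name": "get_file",
    "description": "Return the text of a file in the project.",
    "inputSchema": {
        "type": "object",
        "properties": {"path": {"type": "string"}},
        "required": ["path"],
    },
}]


def to_function_specs(tools: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Translate MCP tool definitions into function-calling tool specs."""
    return [{
        "type": "function",
        "function": {
            "name": t["name"],
            "description": t.get("description", ""),
            "parameters": t["inputSchema"],  # JSON Schema carries over unchanged
        },
    } for t in tools]


def fake_model(prompt: str, tools: List[Dict[str, Any]]) -> Dict[str, Any]:
    # Stub for the LLM: it "decides" to call the first tool it was offered,
    # returning arguments as a JSON string, as function-calling APIs typically do.
    name = tools[0]["function"]["name"]
    return {"tool_call": {"name": name, "arguments": json.dumps({"path": "README.md"})}}


def call_mcp_tool(name: str, arguments: Dict[str, Any]) -> Dict[str, Any]:
    # Stub for a tools/call round trip through the host's MCP client.
    files = {"README.md": "# Demo project"}
    return {"content": [{"type": "text", "text": files[arguments["path"]]}]}


specs = to_function_specs(mcp_tools)
reply = fake_model("What is in the README?", specs)
call = reply["tool_call"]
result = call_mcp_tool(call["name"], json.loads(call["arguments"]))
print(result["content"][0]["text"])
```

In a real host, the tool result would then be appended to the conversation and the model called again to produce its final answer.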

Scalability, Modularity, and Fine-Tuning Support: By introducing a uniform interface, MCP greatly improves scalability and modularity of generative AI deployments. Developers can mix and match MCP servers to compose complex functionality, rather than trying to cram all knowledge into one model. Need more capabilities? Just spin up a new server for that domain. This modular design supports scalability in that each server can be developed, updated, or scaled (horizontally) independently. Some servers might run locally for low-latency access (e.g. a filesystem server), while others run as robust cloud services (e.g. a database query engine serving many users). MCP’s open standard also enables switching out the model itself with minimal fuss – since the protocol to the data/tools remains the same, one could use OpenAI’s GPT today and swap to an open-source LLM tomorrow as the host, without rewriting all integrations. In terms of fine-tuning, MCPs actually mitigate the need to fine-tune giant models on every dataset. Rather than fine-tuning an LLM on proprietary data (which can be costly and slow), an organization can keep their sensitive data in a database or vector store and let the model query it via MCP. The model can remain general-purpose, and the MCP servers deliver contextual data on demand to specialize the model’s responses. This dynamic approach can be seen as an alternative to extensive fine-tuning: the model learns how to use tools, and the tools provide the latest knowledge or actions. Of course, models might still be fine-tuned for better tool-use or reasoning abilities, but they no longer need to embed all world knowledge. Overall, MCP adds a layer of abstraction that cleanly separates the concerns of reasoning (handled by the LLM) and knowledge access/action (handled by MCP servers). This separation yields a more maintainable and scalable generative AI system.
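To illustrate serving data on demand rather than fine-tuning it into the model, the following sketch shows a hypothetical tool handler backed by SQLite. It answers lookups at query time and wraps the answer in MCP-style text content with an `isError` flag. The `products` table and the `product_lookup` name are assumptions made for the example.

```python
import sqlite3
from typing import Any, Dict

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, name TEXT, price_cents INTEGER)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?)",
    [("A-100", "Widget", 1999), ("B-200", "Gadget", 4550)],
)


def product_lookup(sku: str) -> Dict[str, Any]:
    """Hypothetical tool handler: a parameterized query, answer wrapped as text content."""
    row = conn.execute(
        "SELECT name, price_cents FROM products WHERE sku = ?", (sku,)
    ).fetchone()
    if row is None:
        return {"content": [{"type": "text", "text": f"No product {sku}"}], "isError": True}
    name, cents = row
    return {"content": [{"type": "text", "text": f"{name}: ${cents / 100:.2f}"}], "isError": False}


print(product_lookup("B-200")["content"][0]["text"])  # Gadget: $45.50

# Changing the data changes the next answer immediately; nothing is retrained.
conn.execute("UPDATE products SET price_cents = 4200 WHERE sku = 'B-200'")
print(product_lookup("B-200")["content"][0]["text"])  # Gadget: $42.00
```

Because the answer is read from the database on every call, the price change shows up at once, which is the property that lets tool access stand in for some of the work fine-tuning would otherwise do.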

Key Initiatives and Open-Source Ecosystem

Since its introduction, the Model Context Protocol has quickly become a collaborative effort in the AI community, with major AI organizations and open-source contributors rallying to build out the MCP ecosystem. Below we highlight some key initiatives and players:

- Anthropic (Originator of MCP): Anthropic open-sourced the MCP specification and reference SDKs in November 2024. The specification (available on GitHub and a dedicated site) defines the protocol in detail, and the official SDKs, initially Python and TypeScript, have since expanded to Java, Kotlin, and C#, making it easier to implement MCP clients or servers. Alongside the spec, Anthropic released a suite of open-source MCP server implementations for common tools and data systems. These include connectors for Google Drive (file storage), Slack (messaging), GitHub and Git (code repositories), PostgreSQL (database), Puppeteer (web browser automation), and more. By kick-starting an open library of MCP servers, Anthropic aimed to seed the ecosystem with useful integrations that developers can readily plug into their AI applications. Anthropic also built MCP support into its own products: the Claude Desktop app can connect to local MCP servers to fetch context, and Anthropic noted that Claude 3.5 Sonnet is adept at quickly building MCP server implementations. The company positions MCP as a secure way to keep data access within a user’s infrastructure (MCP servers can run behind the firewall) while still letting the AI assistant use that data. Its advocacy has been crucial in framing MCP as a community-driven standard rather than a proprietary tool.

- OpenAI: Rather than creating a competing standard, OpenAI has embraced MCP and integrated it into its tooling. OpenAI’s Agents SDK (a toolkit for building AI agents on top of models like GPT-4) added support for MCP, allowing developers to attach MCP servers as tools for GPT-based agents. The Agents SDK provides helper classes for connecting to both local (stdio) and remote (SSE) MCP servers, and its documentation includes an example that uses the official filesystem server package from npm. This means an agent built with OpenAI’s framework can tap into the growing catalog of MCP-compatible tools out of the box. It is a significant endorsement: OpenAI’s involvement signals that MCP is not limited to Anthropic’s models but is meant to be model-agnostic and interoperable. OpenAI had previously developed its own plugin system for ChatGPT (based on OpenAPI specifications), but the convergence on MCP suggests an industry standard is coalescing. OpenAI’s function calling also complements MCP well: whereas ChatGPT plugins required manual definitions, an OpenAI agent using MCP can discover the available tools at runtime and invoke them in a standardized way. OpenAI’s support has likely accelerated MCP adoption by making it accessible to the large GPT developer community.

- Microsoft: Microsoft is another major player driving MCP adoption, particularly in enterprise and productivity applications. In March 2025, Microsoft announced first-class MCP support in Microsoft Copilot Studio, which is their platform for enterprises to build and manage AI Copilots and agents. The integration allows developers (or “makers”) using Copilot Studio to add MCP-compatible AI apps and agents with a few clicks. Essentially, Copilot Studio can act as an MCP host – it can connect to external MCP servers representing knowledge bases or services, and automatically incorporate those as “actions” the Copilot can perform. Microsoft highlights that connecting an MCP server will auto-populate the agent with the server’s available actions/knowledge, and these will update as the server’s capabilities evolve. Microsoft has also integrated MCP with its existing enterprise connector infrastructure, meaning organizations can use MCP servers under the umbrella of Azure’s security and governance features (virtual network isolation, data loss prevention policies, authentication). This is critical for enterprise adoption: it lets companies expose internal systems to AI in a controlled, auditable manner. Microsoft’s embrace of MCP (and hosting a marketplace of pre-built MCP connectors in Copilot Studio) shows that MCP is being seen as the standard glue for AI-to-data integration in enterprise settings. Instead of reinventing a proprietary system, Microsoft is leveraging the open protocol – a strong sign of MCP’s momentum across the industry.

- Hugging Face and Open-Source Community: The open-source AI community, including Hugging Face, has taken a keen interest in MCP. The MCP announcement initially made a modest splash, but by early 2025 interest surged, with community discussions suggesting MCP may even overtake earlier tool-integration approaches like LangChain or OpenAI’s plugin API in mindshare. A Turing Post explainer published on the Hugging Face blog calls MCP a potential “game-changer for building agentic AI systems” as major AI players rally behind it. It emphasizes that MCP is not a panacea by itself but an integration layer that complements agent frameworks rather than replacing them. Open-source projects have sprung up to bridge MCP with existing ecosystems; for example, community contributors have built packages for using MCP servers as tools within popular agent libraries such as LangChain and smolagents. Hugging Face’s Transformers library has also explored agent tooling (such as Transformers Agents, which let an LLM call other models or APIs), and while not directly MCP, these efforts share the same goal of connecting models with external functionality. Given MCP’s surge in adoption, it would not be surprising to see MCP integrated more broadly across Hugging Face’s tooling in the near future. The community’s enthusiasm is evidenced by the rapid proliferation of guides, tutorials, and discussions on using MCP with local LLMs, integrating it into Retrieval-Augmented Generation pipelines, and even combining it with multi-agent systems. In short, open-source developers are actively extending MCP support and ensuring it works across various AI platforms and frameworks, a healthy sign for its longevity as a standard.

- Other Notable Initiatives: A number of tech companies and startups are building on MCP to deliver solutions. Anthropic’s announcement mentioned early adopters like Block (formerly Square) and Apollo integrating MCP into their systems. Several developer-tool companies – Zed (a code editor), Replit, Codeium, and Sourcegraph – are working with MCP to enhance their AI-assisted coding features. By using MCP, these code assistants can securely fetch relevant context (e.g. your project files, git history, or documentation) to help the AI generate more accurate and context-aware code, with less guesswork. Another notable example is MindsDB, an open-source AI data integration platform. In April 2025 MindsDB announced comprehensive support for MCP across its platform, effectively turning MindsDB into a unified MCP data hub for enterprises. MindsDB’s MCP implementation lets an AI agent query dozens of databases and business applications through one federated interface, treating disparate data sources “as if they were a single database”. This kind of innovation shows that companies are not only adopting MCP, but also extending it – e.g. MindsDB added features like federated queries, security controls, and performance optimizations on top of the base MCP spec. The result is an enterprise-grade MCP server that can connect an AI to hundreds of data sources with one integration. All these initiatives, from big tech to startups, underscore a global trend: MCP is emerging as the de facto interface between AI and the rest of the tech stack, analogous to how standard protocols in the past (HTTP, ODBC, etc.) became ubiquitous bridges between systems.

Adoption in Industry and Use Cases

The rise of MCPs is closely tied to the explosion of industrial applications for generative AI. Organizations across domains – from software development to customer service – are eager to harness LLMs, but they need them to work with proprietary data and complex workflows. MCPs are increasingly the mechanism to achieve this integration in a scalable way. Below are key domains and examples of how MCP-driven generative AI is being used or piloted:

- Software Development and DevOps: AI coding assistants and developer tools are among the early adopters of MCP. By connecting to source code repositories, issue trackers, and build systems via MCP, an AI pair programmer can have full context of a project. For example, IDE plugins or cloud IDEs (like those from Replit or Sourcegraph) use MCP servers to let the AI fetch relevant code from the repository, query git history, or run test cases as needed. This means when a developer asks the AI *“What does this function do?”* or requests help implementing a feature, the AI can pull in the latest code context or documentation through MCP instead of relying solely on training data. Companies like Codeium (AI code autocomplete) similarly plan to use MCP to securely access corporate codebases and knowledge. The result is more accurate and helpful code generation with less hallucination, as the AI always has the option to get ground-truth context (for instance, retrieving an API specification from an internal wiki via MCP). DevOps assistants could likewise use MCP to interface with cloud platforms or monitoring tools – e.g. an AI agent that can fetch metrics, logs, or deployment statuses through standardized MCP connectors rather than custom scripts. This greatly reduces the burden of integrating AI into the software development lifecycle.

- Enterprise Data Integration and Automation: Many enterprises view MCP as a way to achieve a “single connectivity layer” between AI and their myriad internal systems. Instead of hard-coding an AI assistant to each database or SaaS API, companies can deploy MCP servers for their CRM, ERP, data warehouse, and so on, then have any AI agent access them via the common protocol. Block’s CTO, quoted in Anthropic’s MCP announcement, described this vision: open technologies like MCP are bridges that connect AI to real-world applications, enabling agentic systems that take over mechanical tasks so people can focus on creative work. A concrete example is an enterprise deploying an internal AI assistant that can answer questions by pulling data from both a SharePoint knowledge base and a SQL sales database; MCP would allow the assistant to query both seamlessly. Automation scenarios also benefit. Consider an AI agent handling an HR onboarding workflow: via MCP, it could create an employee record in Workday, send a Slack message to IT, and update a Trello board, all in one conversational flow, with each action routed through the respective MCP connector. This uniformity reduces development effort (developers integrate once with MCP rather than with N different APIs) and improves maintainability. Enterprises also appreciate that MCP servers can enforce security and compliance – e.g. an MCP connector to an email system can be set to disallow sending certain content – acting as a governed gateway. Microsoft’s integration of MCP into Copilot Studio’s connectors, with virtual networks and DLP controls, is evidence that enterprise IT requirements (security, monitoring, auditability) are being baked into MCP deployments, making CIOs more comfortable with AI agents having broader access. In short, MCP is powering enterprise automation by linking AI agents with internal tools under proper governance.

- Customer Support and Service: Generative AI chatbots for customer support are another use case where MCP is making inroads. A support chatbot can be far more effective if it can both retrieve information and take actions on behalf of the customer. Using MCP, a support AI could, for example, pull a customer’s order history from an e-commerce database, then initiate a refund via a billing system connector, all during the chat session. Companies are exploring MCP to integrate AI assistants with CRM systems (like Salesforce), ticketing systems (like Zendesk or Jira Service Management), and knowledge bases (like Confluence or corporate wikis). Rather than training a huge model on all FAQ articles (which may go out of date), the AI can query the knowledge base in real time for the latest answer. One guide describes a case in point: an AI support agent that uses MCP to interface with multiple LLMs or tools – for example, if one model is better at classification and another at drafting answers, the agent could coordinate them via MCP. Even without multiple models, MCP allows a support bot to escalate or log issues: it could create a support ticket in an ITSM tool or send a summary email to a human agent by calling the appropriate MCP server. Early adopters in the customer support realm include startups building AI concierge or troubleshooting bots that link to product databases and user account data through MCP servers (ensuring the AI always has the right info when helping a user). Overall, MCP is accelerating the deployment of AI in customer service by providing the needed connectors to enterprise customer data and the actions to resolve issues, all in a secure, standardized way.

- Engineering, Design, and Other Domains: Beyond software and IT, generative AI assistants are being prototyped in fields like engineering design, healthcare, finance, and more – and MCP is playing a role in connecting them to domain-specific tools. In engineering, for example, a product design AI might interface with a CAD system through an MCP server to pull component specifications or even to initiate simulations. This could enable an AI assistant that helps an engineer by fetching relevant CAD models or material properties on the fly. In architecture or construction, an AI agent could use MCP to query BIM (Building Information Model) data or building-regulation databases when answering a design question. In finance, where compliance is critical, MCP connectors could link an AI to up-to-date market data or internal risk models without exposing the core model to the raw data permanently. Even in healthcare, an AI doctor’s assistant could use MCP to retrieve a patient’s lab results from an EHR system (with proper permissions and auditing). These kinds of specialized integrations are being explored in pilot projects. One exciting frontier is robotics and IoT: researchers have noted that MCP could let AI agents embedded in smart environments access sensor data or send commands in a standardized way. For instance, a home assistant AI could call an MCP server to get thermostat readings or control a smart appliance. This would give AI “eyes and hands” in the physical world while keeping the interface uniform. Many of these applications are still in early stages, but they illustrate the broad versatility of MCP: any scenario where an AI needs to work across multiple systems or data streams is a candidate for MCP-based design.
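The enterprise-governance point, an MCP connector acting as a governed gateway that can refuse certain content, can be sketched as a wrapper around a tool handler. This is a hypothetical policy layer, not a feature defined by the MCP specification: the `send_email` stub, the SSN-like regular expression, and the audit-log format are all assumptions for illustration.

```python
import re
from typing import Any, Callable, Dict, List

# Hypothetical DLP rule: block anything that looks like a US Social Security number.
BLOCKED = [re.compile(r"\b\d{3}-\d{2}-\d{4}\b")]
audit_log: List[Dict[str, str]] = []


def governed(tool_name: str, handler: Callable[..., Dict[str, Any]]) -> Callable[..., Dict[str, Any]]:
    """Wrap a tool handler so every call is checked against policy and logged."""
    def wrapper(**arguments: Any) -> Dict[str, Any]:
        text = " ".join(str(v) for v in arguments.values())
        if any(p.search(text) for p in BLOCKED):
            audit_log.append({"tool": tool_name, "decision": "blocked"})
            return {"content": [{"type": "text", "text": "Blocked by policy"}], "isError": True}
        audit_log.append({"tool": tool_name, "decision": "allowed"})
        return handler(**arguments)
    return wrapper


def send_email(to: str, body: str) -> Dict[str, Any]:
    # Stub: a real connector would call the mail system's API here.
    return {"content": [{"type": "text", "text": f"Sent to {to}"}], "isError": False}


safe_send = governed("send_email", send_email)
print(safe_send(to="it@example.com", body="New hire starts Monday")["isError"])  # False
print(safe_send(to="it@example.com", body="SSN is 123-45-6789")["isError"])      # True
print([e["decision"] for e in audit_log])  # ['allowed', 'blocked']
```

Putting the check in the server rather than in the prompt means the policy holds no matter which model or host issues the call.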

Comparison of MCP and Related Approaches

Multiple approaches have been developed to connect AI models with external context and tools. The table below compares the Model Context Protocol with other notable frameworks and methods in use today, highlighting how they differ in design and adoption.

Sources: The concept of MCP as an open “lingua franca” for AI tools was introduced by Anthropic and is now supported by other major AI platforms. Prior to MCP, approaches like custom plugins and agent-specific tool sets were common, as seen with OpenAI’s plugin system and numerous agent frameworks. The table reflects the state as of early 2025, when MCP is surging in adoption and being integrated into many products, while older methods are evolving or converging towards this new standard.

Future Impact and Trends

The advent of Model Context Protocols is poised to significantly influence the future of generative AI in both technical capability and industry deployment. One major impact is moving AI systems from isolated “brains” toward integrated autonomous agents that can truly act on the world’s knowledge. With MCP or similar protocols, an AI model is no longer limited to its static training data or to whatever was loaded into its context window up front: it can pull in fresh context as needed, whether it’s the latest financial report or a sensor reading from a second ago. This makes AI responses more relevant and timely, a critical factor for applications like real-time decision support or monitoring. We’re likely to see future LLMs designed with the assumption that external tools are available, optimizing their prompts and architectures to use protocols like MCP efficiently.

Another anticipated impact is the rise of complex, multi-step AI workflows that execute end-to-end processes. Already, early users of MCP have noted the ease of orchestrating multi-step tasks across systems: e.g. an AI agent can plan an event by sequentially calling calendar, email, booking, and spreadsheet tools via MCP, maintaining context across all steps. In the near future, such cross-system agents could become common in business settings, automating entire workflows (with human oversight). This blurs the line between simple Q&A bots and true workflow automation bots, powered by generative AI reasoning plus MCP-based action. Importantly, MCP makes an audit trail straightforward to keep: because every request and response follows the same structure, organizations can log and review what the AI accessed or changed, which helps build trust in autonomous operations.
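The audit-trail idea can be sketched as a host that records every tool request and result as one JSON line while it chains a multi-step task. The three tools and their stubbed outputs are hypothetical, loosely modeled on the event-planning example; the point is that each step's output feeds the next call and every call leaves a uniform, reviewable record.

```python
import json
from typing import Any, Callable, Dict, List

# Hypothetical tools with stubbed results; a real host would reach these via MCP servers.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {
    "find_slot": lambda attendees: {"slot": "2025-06-02T10:00"},
    "reserve_room": lambda slot: {"room": "B12", "slot": slot},
    "send_email": lambda to, subject: {"status": "queued", "to": to},
}

trail: List[str] = []


def call(step: int, name: str, **arguments: Any) -> Dict[str, Any]:
    """Invoke a tool and append one JSON line describing the request and its result."""
    result = TOOLS[name](**arguments)
    trail.append(json.dumps({"step": step, "tool": name, "arguments": arguments, "result": result}))
    return result


# Each step consumes the previous step's output.
slot = call(1, "find_slot", attendees=["ana", "raj"])["slot"]
room = call(2, "reserve_room", slot=slot)["room"]
call(3, "send_email", to=["ana", "raj"], subject=f"Planning session in {room} at {slot}")

for line in trail:
    print(line)
```

Writing the trail as JSON lines keeps it easy to append to, ship to a log store, and replay during a review.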

Collaboration and modularity will also be enhanced. MCP can enable agent societies – multiple AI agents with different specialties cooperating by sharing tools and context. For example, a “research” agent could use MCP to fetch information which it then passes to a “planner” agent, all mediated by agreed protocol messages. This could spur architectures where specialized models (including smaller fine-tuned models for specific skills) collectively solve problems, rather than one monolithic model. Each agent or tool can be upgraded independently. This modular AI ecosystem mirrors microservices in software: small components communicating via a standard protocol to achieve a larger goal. Such designs are inherently more scalable and maintainable, and MCP is a key enabler by acting as the common interface.

On the market side, if MCP (or similar open protocols) continues to gain adoption, we may witness a convergence of AI integration standards. Competing approaches often converge eventually (consider how HTTP became the universal standard for web communication). MCP’s rapid uptake by multiple vendors suggests it could become the default way AI models interface with external data. This would benefit the industry greatly: tool providers could implement an MCP server once and have it work with any AI agent, and AI platform providers could focus on improving models knowing that a rich library of tools is readily available. It lowers barriers for new entrants too: a startup with a novel AI service can plug into the existing MCP ecosystem to offer its service as a “tool”, immediately accessible to users of any MCP-aware AI assistant. In effect, MCP could create an AI plugin economy that is cross-platform. We’re already seeing early signs: Microsoft hosting a marketplace of MCP servers, and open-source communities creating hubs for MCP integrations.

In the coming years, expect more big tech companies and standards bodies to get involved. It wouldn’t be surprising if Google and others adopt the MCP standard or propose extensions to it (for example, adding OAuth authentication support, which Anthropic has indicated is on the roadmap, to allow secure access to user data like emails or calendars through MCP). Standardization efforts may formalize MCP under an industry consortium if it continues to expand. The protocol will likely evolve as well: support for streaming data, binary large data (for images or files), richer semantic descriptions of tools, and so on, to cover more advanced use cases.

Finally, MCP’s impact on fine-tuning and model development is worth noting. As mentioned, if much of the specific knowledge can be offloaded to tools, developers might focus on fine-tuning models to be better decision-makers – knowing when to call a tool and how to interpret results – rather than packing domain facts into the model weights. This could lead to more efficient use of smaller models orchestrated by MCP, rather than chasing ever-larger monolithic models. On the other hand, the availability of massive context via MCP might drive model improvements in handling long dialogues and tool-rich prompts. We may see architectures like retrieval-augmented generation (RAG) blend with MCP-based tool use, where models both retrieve text chunks and call APIs in a unified way. The ultimate promise is more powerful, context-aware AI that can seamlessly integrate into our digital and physical world.

Conclusion

In summary, Model Context Protocols represent a pivotal development in generative AI’s evolution from clever text generators to truly useful assistants embedded in real-world tasks. Technically, MCP provides the standardized conduit for models to interact with any external data source or tool, bringing modularity and scalability to AI system design. The current state of MCP adoption is robust, championed by its creators at Anthropic and quickly embraced by OpenAI, Microsoft, and the open-source community, making it a rare instance of an open standard catching on in the notoriously fast-moving AI field. Industries worldwide are exploring MCP-powered AI solutions, from coding copilots to enterprise chatbots and beyond, reaping the benefits of easier integration and richer functionality. Looking ahead, the impact of MCPs on generative AI could be transformative: they pave the way for interoperable AI agents that can plug into the vast infrastructure of the digital world much like web browsers plug into the internet. While challenges remain (e.g. driving even wider adoption, ensuring security in tool use, and refining the protocol), the trajectory is clear. MCP and similar protocols are set to become an essential layer in the AI stack – the connective tissue that turns large language models into versatile, action-taking problem solvers. By standardizing context and tool access, MCPs are unlocking a future where generative AI is not an isolated model with a fixed knowledge cutoff, but a dynamic collaborator that can tap the collective knowledge and services of our entire tech ecosystem. The global tech industry’s embrace of MCPs signals a shared understanding that integration is the next frontier for AI, and working together on open protocols will accelerate innovation for everyone.

Sources

- Hopsworks AI Dictionary – What is Model Context Protocol?

- Anthropic News – Introducing the Model Context Protocol (Nov 2024)

- TechCrunch – OpenAI adopts Anthropic’s standard for connecting AI models to data (Mar 2025)

- Microsoft Dev Blogs – C# SDK for MCP Announcement (Apr 2025)

- Hugging Face Blog – MCP is All You Need: The Future of AI Interoperability

- Hugging Face (Turing Post) – Why is Everyone Talking About MCP?

- OpenAI Agents SDK Documentation – Model Context Protocol (MCP) Usage

- Microsoft Tech Community – Integrating Azure OpenAI with MCP

- Anthropic Documentation – Claude’s MCP Documentation

- Community forums and tutorials (Medium, Reddit, YouTube) discussing MCP use cases (Various authors, 2025)